📖 Introduction

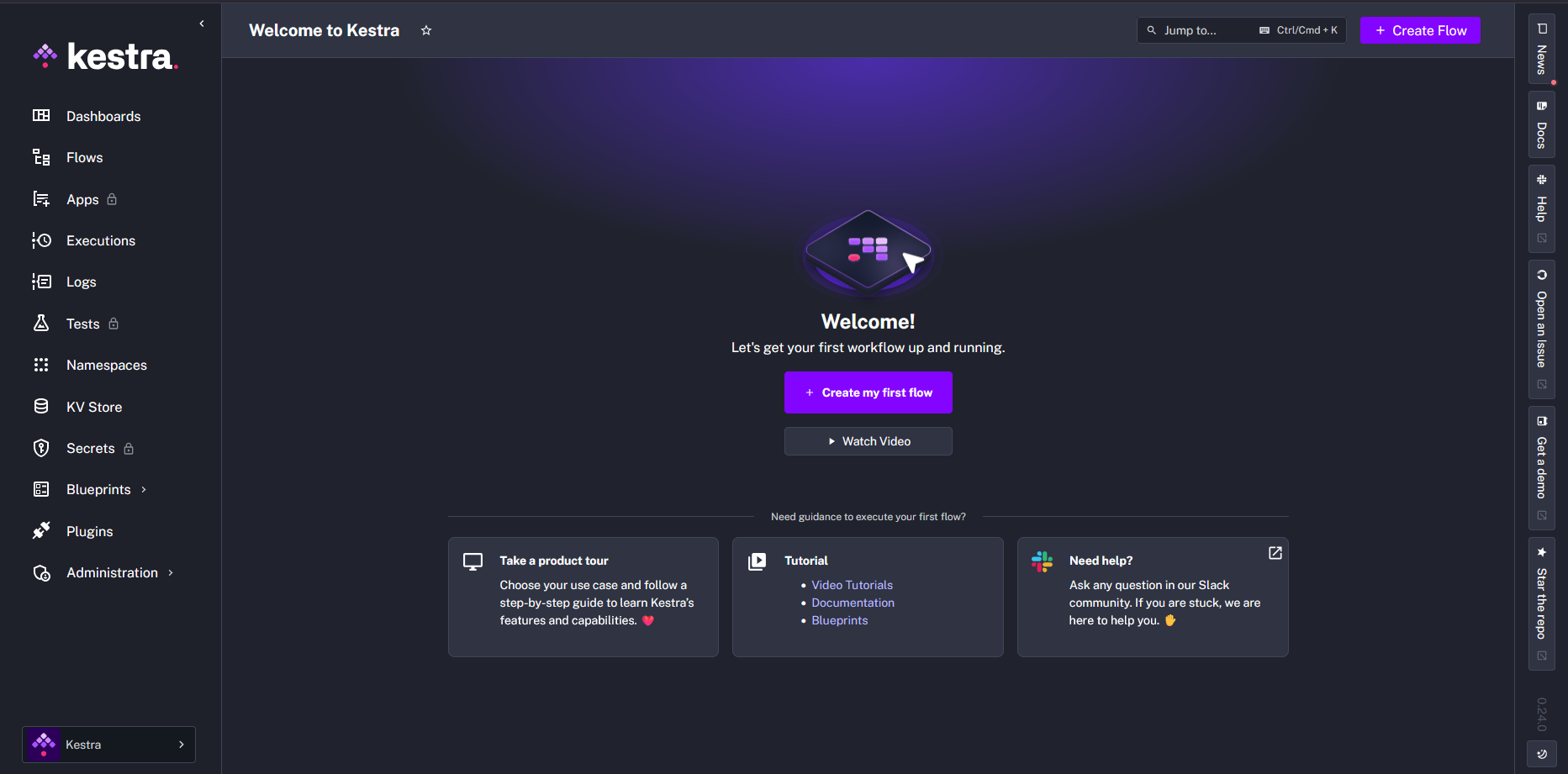

In modern data engineering and automation, orchestrating workflows is essential. Kestra is an open-source, declarative orchestration platform: you define workflows in YAML, and Kestra schedules, runs, and monitors them.

Think of Kestra as “automation meets DevOps” — you define tasks, connect them in sequence, and Kestra handles execution, scheduling, and monitoring. Whether you’re managing data pipelines, automating infrastructure, or orchestrating microservices, Kestra provides a powerful yet simple solution.

🔹 Why Use Kestra?

- YAML-based workflows – easy to read and version-control friendly

- Beautiful UI – debug and monitor in real-time

- Multiple installation options – Docker, Kubernetes, standalone

- Scalable & cloud-native – from small jobs to enterprise pipelines

- Event-driven & scheduled – automate both scheduled and real-time event-driven workflows

- Rich plugin ecosystem – hundreds of plugins for databases, APIs, and more

- Language agnostic – run code in Python, Node.js, R, Go, Shell, and more

🛠️ Installation Methods

Kestra can be installed in various ways depending on your environment and requirements. Let’s explore the different installation methods.

Method 1: Docker Installation

Prerequisites

- Docker installed

- Internet connection

Single Container Setup

The fastest way to get started with Kestra is using a single Docker container:

    docker run --pull=always --rm -it -p 8080:8080 --user=root \
      -v /var/run/docker.sock:/var/run/docker.sock \
      -v /tmp:/tmp kestra/kestra:latest server local

For Windows PowerShell:

    docker run --pull=always --rm -it -p 8080:8080 --user=root `
      -v "/var/run/docker.sock:/var/run/docker.sock" `
      -v "C:/Temp:/tmp" kestra/kestra:latest server local

Docker Compose Setup (Recommended)

For a more production-ready setup, use Docker Compose:

- Create a `docker-compose.yml` file:

      services:
        kestra:
          image: kestra/kestra:latest
          container_name: kestra
          pull_policy: always
          ports:
            - "8080:8080"
          user: root
          volumes:
            - /var/run/docker.sock:/var/run/docker.sock
            - /tmp:/tmp
          command: server local
          restart: unless-stopped

📜 Advanced YAML: a standalone server backed by PostgreSQL

    volumes:
      postgres-data:
        driver: local
      kestra-data:
        driver: local

    services:
      postgres:
        image: postgres
        volumes:
          - postgres-data:/var/lib/postgresql/data
        environment:
          POSTGRES_DB: kestra
          POSTGRES_USER: kestra
          POSTGRES_PASSWORD: k3str4
        healthcheck:
          test: ["CMD-SHELL", "pg_isready -d $${POSTGRES_DB} -U $${POSTGRES_USER}"]
          interval: 30s
          timeout: 10s
          retries: 10

      kestra:
        image: kestra/kestra:latest
        pull_policy: always
        user: "root"
        command: server standalone
        volumes:
          - kestra-data:/app/storage
          - /var/run/docker.sock:/var/run/docker.sock
          - /tmp/kestra-wd:/tmp/kestra-wd
        environment:
          KESTRA_CONFIGURATION: |
            datasources:
              postgres:
                url: jdbc:postgresql://postgres:5432/kestra
                driverClassName: org.postgresql.Driver
                username: kestra
                password: k3str4
            kestra:
              repository:
                type: postgres
              storage:
                type: local
                local:
                  basePath: "/app/storage"
              queue:
                type: postgres
              tasks:
                tmpDir:
                  path: /tmp/kestra-wd/tmp
              url: http://localhost:8080/
        ports:
          - "8080:8080"
          - "8081:8081"
        depends_on:
          postgres:
            condition: service_started

- Start Kestra: `docker compose up -d`

- Check logs: `docker compose logs -f`

- Access the Kestra dashboard at `http://<your-server-ip>:8080`
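The container can take a little while to start listening after it is launched. As a rough sketch (not part of Kestra itself), the helper below polls a TCP port until it accepts connections; the function name `wait_for_port` and its parameters are hypothetical and only serve this illustration.

```python
import socket
import time


def wait_for_port(host, port, timeout_seconds=60.0, poll_interval=1.0):
    """Return True once host:port accepts a TCP connection, False on timeout."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=poll_interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(poll_interval)
```

Calling `wait_for_port("localhost", 8080)` before opening the dashboard only confirms that the port is open; it does not verify that the UI has finished initialising.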

Method 2: Kubernetes Installation

For production environments, Kubernetes is recommended.

Prerequisites

- Kubernetes cluster

- Helm installed

Installation Steps

- Add the Kestra Helm repository:

      helm repo add kestra https://helm.kestra.io/

- Install Kestra using Helm:

      helm install kestra kestra/kestra

- For a custom configuration, create a `values.yaml` file and apply it:

      helm upgrade kestra kestra/kestra -f values.yaml

- Get the pod name to access logs:

      export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/name=kestra,app.kubernetes.io/instance=kestra,app.kubernetes.io/component=standalone" -o jsonpath="{.items[0].metadata.name}")

Advanced Kubernetes Configuration

By default, the Helm chart deploys a standalone Kestra service. For a distributed setup, modify your values.yaml:

    deployments:
      webserver:
        enabled: true
      executor:
        enabled: true
      indexer:
        enabled: true
      scheduler:
        enabled: true
      worker:
        enabled: true
      standalone:
        enabled: false

You can also deploy related services:

    # Enable Kafka and Zookeeper
    kafka:
      enabled: true

    # Enable Elasticsearch
    elasticsearch:
      enabled: true

    # Enable PostgreSQL
    postgresql:
      enabled: true

Method 3: Standalone Server Installation

For environments without Docker or Kubernetes, you can use the standalone JAR file.

Prerequisites

Installation Steps

- Download the latest JAR from the Kestra releases page

- Make the JAR executable:

  - For Linux/macOS: No changes needed

  - For Windows: Rename `kestra-VERSION` to `kestra-VERSION.bat`

- Run Kestra in local mode:

      # Linux/macOS
      ./kestra-VERSION server local

      # Windows
      kestra-VERSION.bat server local

- For production, run in standalone mode with a configuration file:

      ./kestra-VERSION server standalone --config /path/to/config.yml

Systemd Service (Linux)

For Linux servers, you can create a systemd service:

    [Unit]
    Description=Kestra Event-Driven Declarative Orchestrator
    Documentation=https://kestra.io/docs/
    After=network-online.target

    [Service]
    Type=simple
    ExecStart=/bin/sh <PATH_TO_YOUR_KESTRA_JAR>/kestra-<VERSION> server standalone
    User=<KESTRA_UNIX_USER>
    Group=<KESTRA_UNIX_GROUP>
    RestartSec=5
    Restart=always
    KillMode=mixed
    TimeoutStopSec=150
    SuccessExitStatus=143
    SyslogIdentifier=kestra

    [Install]
    WantedBy=multi-user.target

🔧 Configuration

Kestra can be configured in several ways:

- Environment Variable: Set the `KESTRA_CONFIGURATION` environment variable with YAML content

- Configuration File: Use the `--config` option to specify a configuration file

- Default Location: Place a config file at `${HOME}/.kestra/config.yml`

Basic Configuration Example

    # Database connection (same keys as in the Docker Compose setup)
    datasources:
      postgres:
        url: jdbc:postgresql://localhost:5432/kestra
        driverClassName: org.postgresql.Driver
        username: kestra
        password: k3str4

    kestra:
      repository:
        type: postgres
      queue:
        type: postgres
      storage:
        type: local
        local:
          basePath: "/tmp/storage"

Advanced Configuration

Using Kafka as Queue

    kestra:
      queue:
        type: kafka
      kafka:
        client:
          properties:
            bootstrap.servers: "localhost:9092"

Configuring Elasticsearch for Indexing

    kestra:
      repository:
        type: elasticsearch
      elasticsearch:
        indices:
          executions:
            index: "kestra_executions"
            type: "split_by_month"
          flows:
            index: "kestra_flows"
          templates:
            index: "kestra_templates"

📋 Workflow Examples

Kestra workflows are defined in YAML format. Let’s look at some examples:

Example 1: Hello World

    id: hello_world
    namespace: dev

    tasks:
      - id: say_hello
        type: io.kestra.plugin.core.log.Log
        message: "Hello, World!"

Example 2: Scheduled Workflow

    id: scheduled_hello
    namespace: dev

    triggers:
      - id: schedule
        type: io.kestra.plugin.core.trigger.Schedule
        cron: "0 * * * *" # Run at minute 0 of every hour

    tasks:
      - id: say_hello
        type: io.kestra.plugin.core.log.Log
        message: "Hello, it's time for a scheduled task!"
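To make the cron expression concrete, here is a small Python sketch of how a five-field expression such as `0 * * * *` maps to run times. It is deliberately minimal and is not how Kestra evaluates schedules: it supports only `*` and plain integers, and the helper names `matches` and `next_runs` are hypothetical.

```python
from datetime import datetime, timedelta


def matches(cron, moment):
    """Check a 5-field cron expression (only '*' and plain integers) against a time."""
    fields = cron.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour day month weekday")
    actual = [
        moment.minute,
        moment.hour,
        moment.day,
        moment.month,
        (moment.weekday() + 1) % 7,  # cron counts Sunday as 0, Python counts Monday as 0
    ]
    return all(field == "*" or int(field) == value for field, value in zip(fields, actual))


def next_runs(cron, start, count):
    """Return the first `count` matching minutes strictly after `start`.

    Note: loops forever if the expression can never match.
    """
    runs = []
    moment = start.replace(second=0, microsecond=0) + timedelta(minutes=1)
    while len(runs) < count:
        if matches(cron, moment):
            runs.append(moment)
        moment += timedelta(minutes=1)
    return runs


if __name__ == "__main__":
    for run in next_runs("0 * * * *", datetime(2024, 1, 1, 10, 15), 3):
        print(run.isoformat())
```

Starting from 10:15, the expression fires at the top of each following hour, which is what "run every hour" means here.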

Example 3: Data Pipeline with Python

    id: python_data_pipeline
    namespace: dev

    tasks:
      - id: fetch_data
        type: io.kestra.plugin.core.http.Request
        uri: https://api.example.com/data
        method: GET
        headers:
          Content-Type: application/json

      - id: process_data
        type: io.kestra.plugin.scripts.python.Script
        beforeCommands:
          - pip install pandas kestra
        script: |
          import json
          import pandas as pd
          from kestra import Kestra

          # Kestra renders the previous task's output into the script before it runs.
          # The raw string keeps JSON escapes intact (it breaks if the body contains ''').
          data = json.loads(r'''{{ outputs.fetch_data.body }}''')

          # Process with pandas: sum the numeric columns per category
          df = pd.DataFrame(data)
          result = df.groupby('category').sum(numeric_only=True).to_json()

          # Publish the result as an output of this task
          Kestra.outputs({"processed_data": result})
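For readers unfamiliar with pandas, the aggregation step in this pipeline amounts to summing values per category. The plain-Python sketch below shows the same idea on made-up records; the field name `amount` and the sample data are assumptions for illustration, since the shape of the API response is not specified.

```python
from collections import defaultdict


def sum_by_category(records, value_field="amount"):
    """Sum `value_field` for each distinct value of the 'category' key."""
    totals = defaultdict(float)
    for record in records:
        totals[record["category"]] += record[value_field]
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical sample rows standing in for the fetched API data.
    sample = [
        {"category": "books", "amount": 12.0},
        {"category": "games", "amount": 30.0},
        {"category": "books", "amount": 8.0},
    ]
    print(sum_by_category(sample))
```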

Example 4: Parallel Tasks

    id: parallel_workflow
    namespace: dev

    tasks:
      - id: start
        type: io.kestra.plugin.core.log.Log
        message: "Starting parallel tasks"

      - id: parallel
        type: io.kestra.plugin.core.flow.Parallel
        tasks:
          - id: task1
            type: io.kestra.plugin.core.log.Log
            message: "Running task 1"

          - id: task2
            type: io.kestra.plugin.core.log.Log
            message: "Running task 2"

      - id: end
        type: io.kestra.plugin.core.log.Log
        message: "All parallel tasks completed"
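The control flow of this workflow (one task, then two tasks side by side, then a final task once both have finished) can be sketched in plain Python. This is only an analogy for the ordering guarantees, not a description of how Kestra schedules tasks; `run_parallel_workflow` is a hypothetical name.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel_workflow():
    """Mimic the flow: start, then task1 and task2 concurrently, then end."""
    messages = ["Starting parallel tasks"]

    with ThreadPoolExecutor(max_workers=2) as pool:
        # Both child tasks are submitted before either result is awaited.
        futures = [pool.submit(str.format, "Running task {}", n) for n in (1, 2)]
        # result() blocks, so the final step cannot begin until both are done.
        messages.extend(future.result() for future in futures)

    messages.append("All parallel tasks completed")
    return messages


if __name__ == "__main__":
    for line in run_parallel_workflow():
        print(line)
```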

Example 5: Error Handling

    id: error_handling
    namespace: dev

    tasks:
      - id: might_fail
        type: io.kestra.plugin.scripts.shell.Commands
        commands:
          - exit 1 # This will fail
        timeout: PT10S
        retry:
          type: constant # fixed pause between attempts
          maxAttempt: 3
          interval: PT1S

    errors:
      - id: handle_failure
        type: io.kestra.plugin.core.log.Log
        message: "Task failed, but we're handling it gracefully"
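The behaviour this example aims for (a bounded number of attempts with a fixed pause between them, followed by an error handler if every attempt fails) can be sketched in Python as follows. It assumes the attempt limit counts total attempts including the first; `run_with_retry` and its parameters are hypothetical names, not Kestra APIs.

```python
import time


def run_with_retry(task, max_attempt=3, interval_seconds=1.0, on_error=None, sleep=time.sleep):
    """Call task() up to max_attempt times with a constant pause between tries.

    If every attempt fails, on_error (if given) receives the last exception,
    playing the role of the flow's error handler, and the exception is re-raised.
    """
    if max_attempt < 1:
        raise ValueError("max_attempt must be at least 1")
    last_error = None
    for attempt in range(1, max_attempt + 1):
        try:
            return task()
        except Exception as error:  # a real runner would be more selective
            last_error = error
            if attempt < max_attempt:
                sleep(interval_seconds)  # constant delay, no backoff
    if on_error is not None:
        on_error(last_error)
    raise last_error
```

The `sleep` parameter exists only so the pause can be replaced in tests; in normal use the default `time.sleep` applies.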

🔌 Plugins and Extensions

Kestra’s functionality can be extended through plugins. Here are some popular plugins:

Database Plugins

- PostgreSQL: `io.kestra.plugin:plugin-jdbc-postgresql`

- MySQL: `io.kestra.plugin:plugin-jdbc-mysql`

- SQL Server: `io.kestra.plugin:plugin-jdbc-sqlserver`

Cloud Provider Plugins

- AWS: `io.kestra.plugin:plugin-aws`

- GCP: `io.kestra.plugin:plugin-gcp`

- Azure: `io.kestra.plugin:plugin-azure`

Scripting Plugins

- Python: `io.kestra.plugin:plugin-script-python`

- Node.js: `io.kestra.plugin:plugin-script-node`

- Shell: `io.kestra.plugin:plugin-script-shell`

Installing Plugins

For Docker installations, use a custom Dockerfile:

    FROM kestra/kestra:latest
    RUN /app/kestra plugins install io.kestra.plugin:plugin-script-python:LATEST

For standalone installations:

    ./kestra-VERSION plugins install io.kestra.plugin:plugin-script-python:LATEST

🔒 Security and Best Practices

Managing Secrets

Kestra provides several ways to manage secrets:

Environment Variables

Create an `.env` file in which each secret name is prefixed with `SECRET_` and each value is base64-encoded:

    SECRET_API_KEY=base64_encoded_value

Reference in workflows:

    tasks:
      - id: api_call
        type: io.kestra.plugin.core.http.Request
        uri: https://api.example.com
        headers:
          Authorization: "Bearer {{ secret('API_KEY') }}"
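Because the value stored in the `.env` file is expected to be base64-encoded, it can help to generate the line programmatically. The sketch below uses only the Python standard library; the helper name `env_line` and the sample token are hypothetical.

```python
import base64


def env_line(name, plaintext):
    """Build a .env entry of the form SECRET_<NAME>=<base64-encoded value>."""
    encoded = base64.b64encode(plaintext.encode("utf-8")).decode("ascii")
    return f"SECRET_{name.upper()}={encoded}"


if __name__ == "__main__":
    # "my-api-token" is a placeholder, not a real credential.
    print(env_line("api_key", "my-api-token"))
```

The workflow then refers to the secret by its name without the prefix, as in `secret('API_KEY')`.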

Namespace Secrets (Enterprise Edition)

In the Enterprise Edition, you can manage secrets at the namespace level with inheritance.

Production Recommendations

- Use a proper database: PostgreSQL is recommended for production

- Enable authentication: Configure OIDC or LDAP authentication

- Regular backups: Back up your database and configuration

- Monitoring: Set up monitoring for Kestra services

- Resource allocation: Allocate appropriate resources based on workload

🔍 Troubleshooting

Common Issues

Docker in Docker (DinD) Issues

Some Kubernetes environments have restrictions on DinD. For non-rootless DinD:

    dind:
      image:
        image: docker
        tag: dind
      args:
        - --log-level=fatal

Database Connection Issues

Check your database connection string and credentials. For PostgreSQL:

    datasources:
      postgres:
        url: jdbc:postgresql://localhost:5432/kestra
        driverClassName: org.postgresql.Driver
        username: kestra
        password: k3str4

Memory Issues

Limit message size to prevent memory problems:

    kestra:
      server:
        messageMaxSize: 1048576 # 1MiB


🎓 Conclusion

Kestra is a powerful, flexible orchestration platform that can handle everything from simple scheduled tasks to complex data pipelines. With its declarative YAML-based approach, intuitive UI, and rich plugin ecosystem, it provides a modern solution for workflow automation needs.

Whether you’re a data engineer, software developer, or platform engineer, Kestra offers the tools you need to automate and orchestrate your workflows efficiently.